In an era where images and visual content dominate our digital landscape, the ability to manipulate and personalize these images has become a necessity.

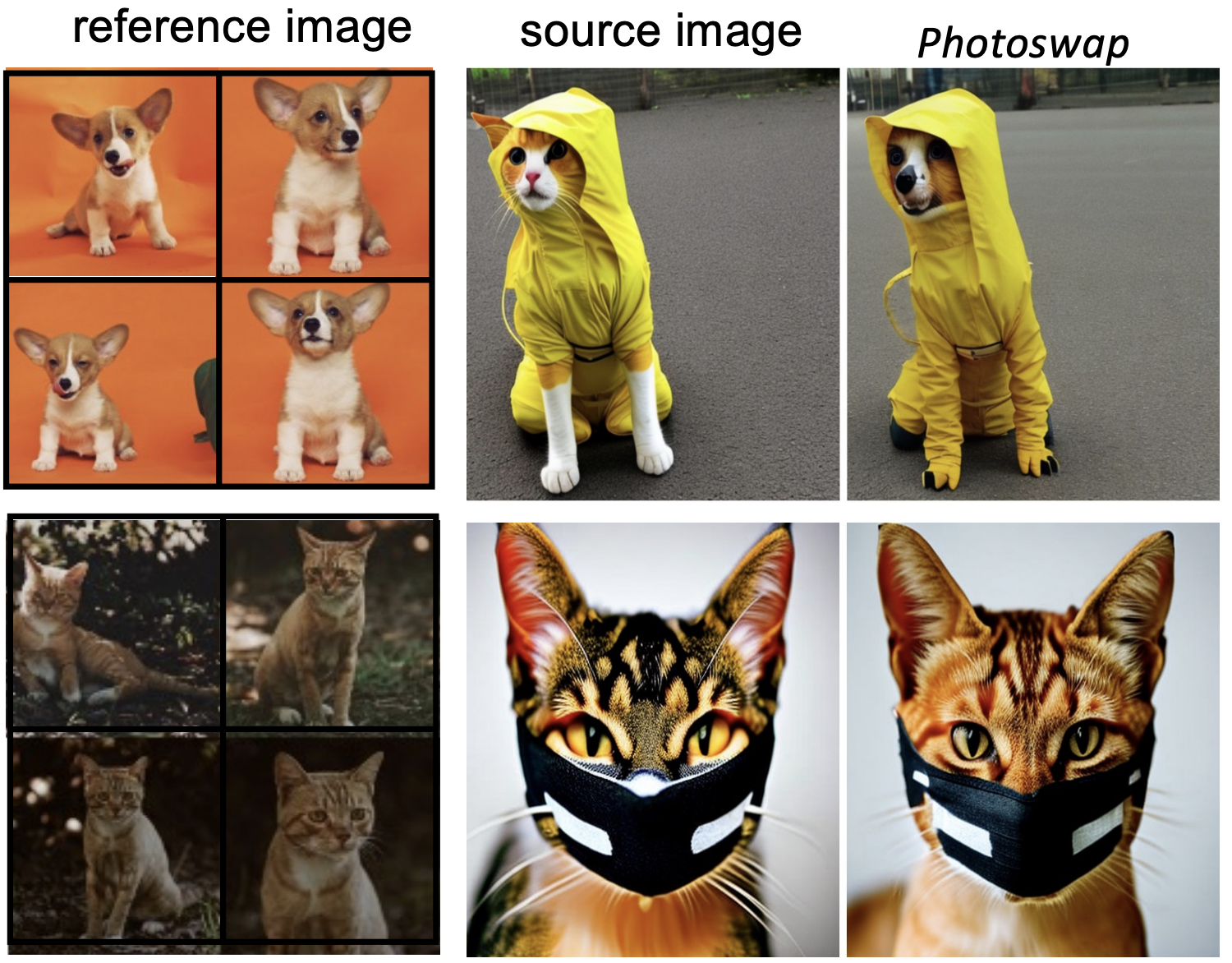

Envision seamlessly substituting a tabby cat lounging on a sunlit window sill in a photograph with your own playful puppy, all while preserving the original charm and composition of the image.

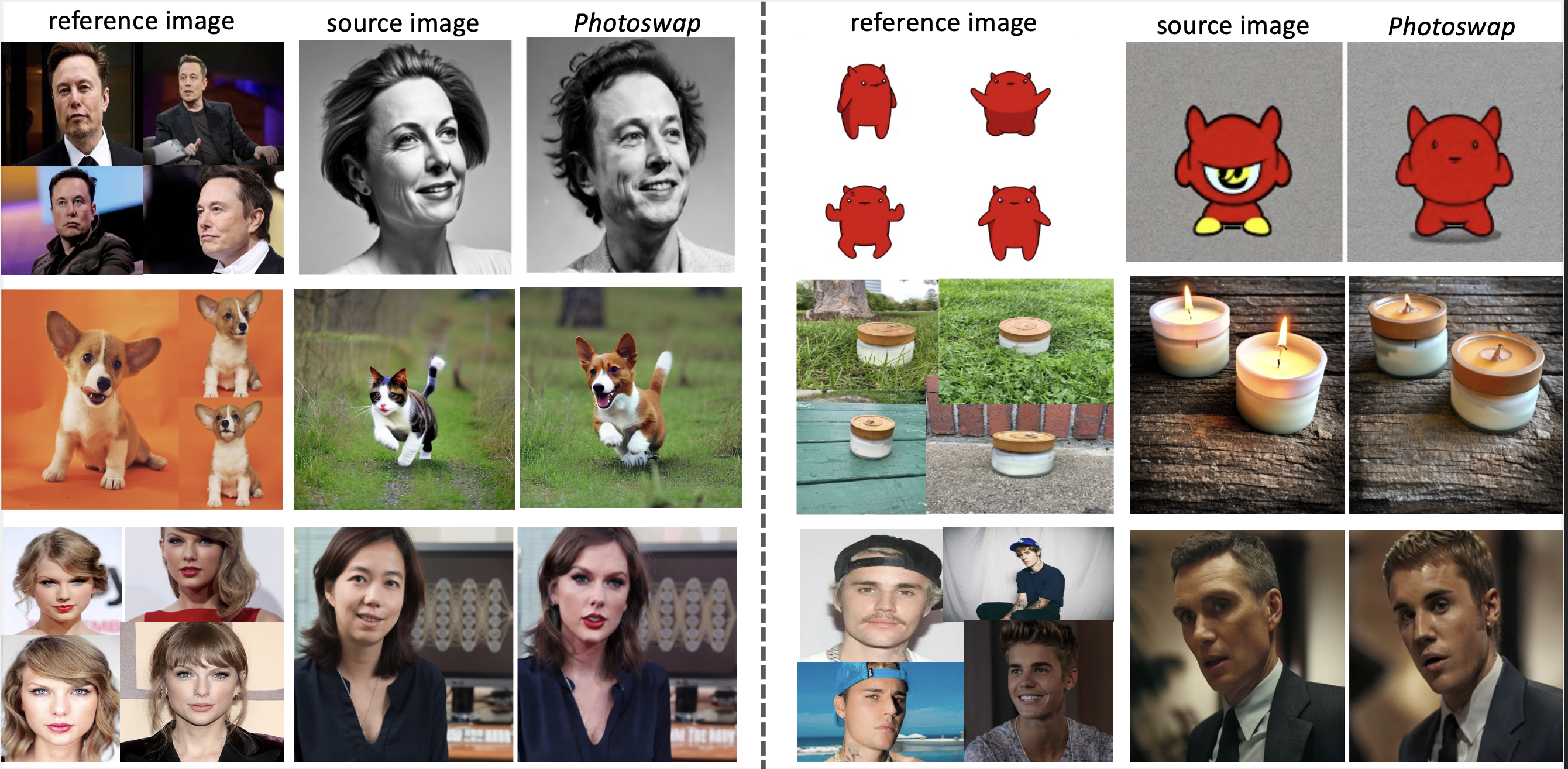

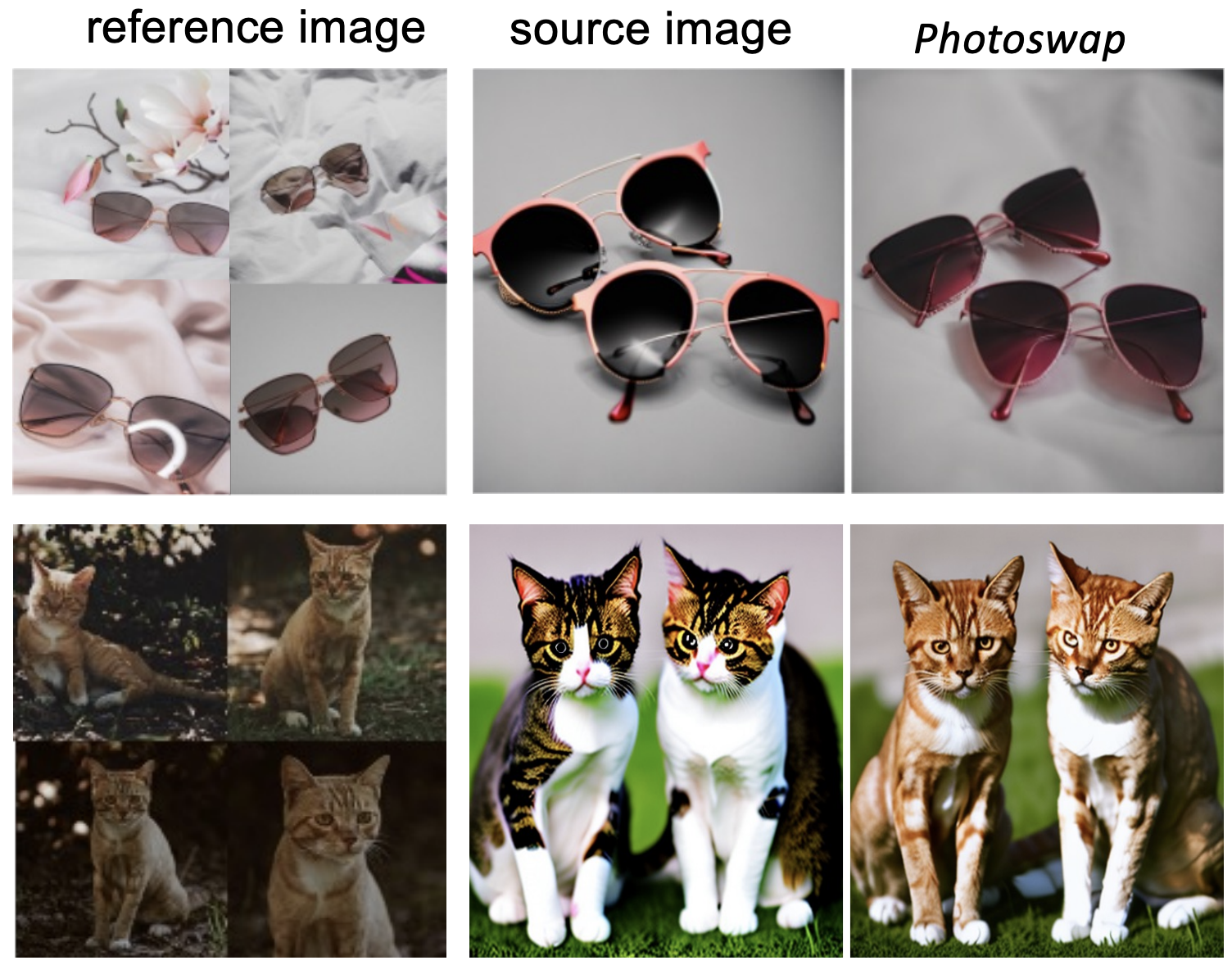

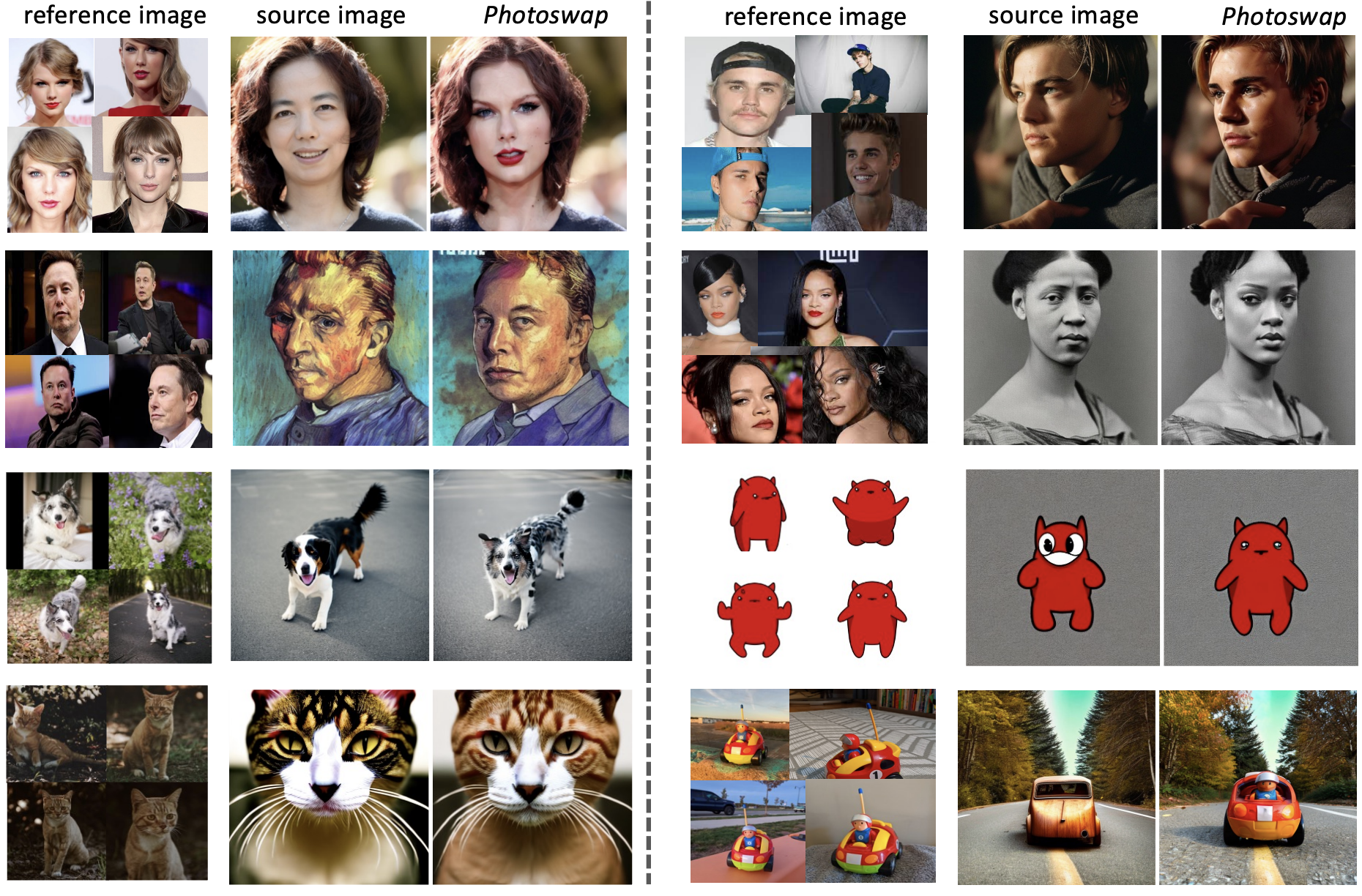

We present Photoswap, a novel approach that enables this immersive image editing experience through personalized subject swapping in existing images.

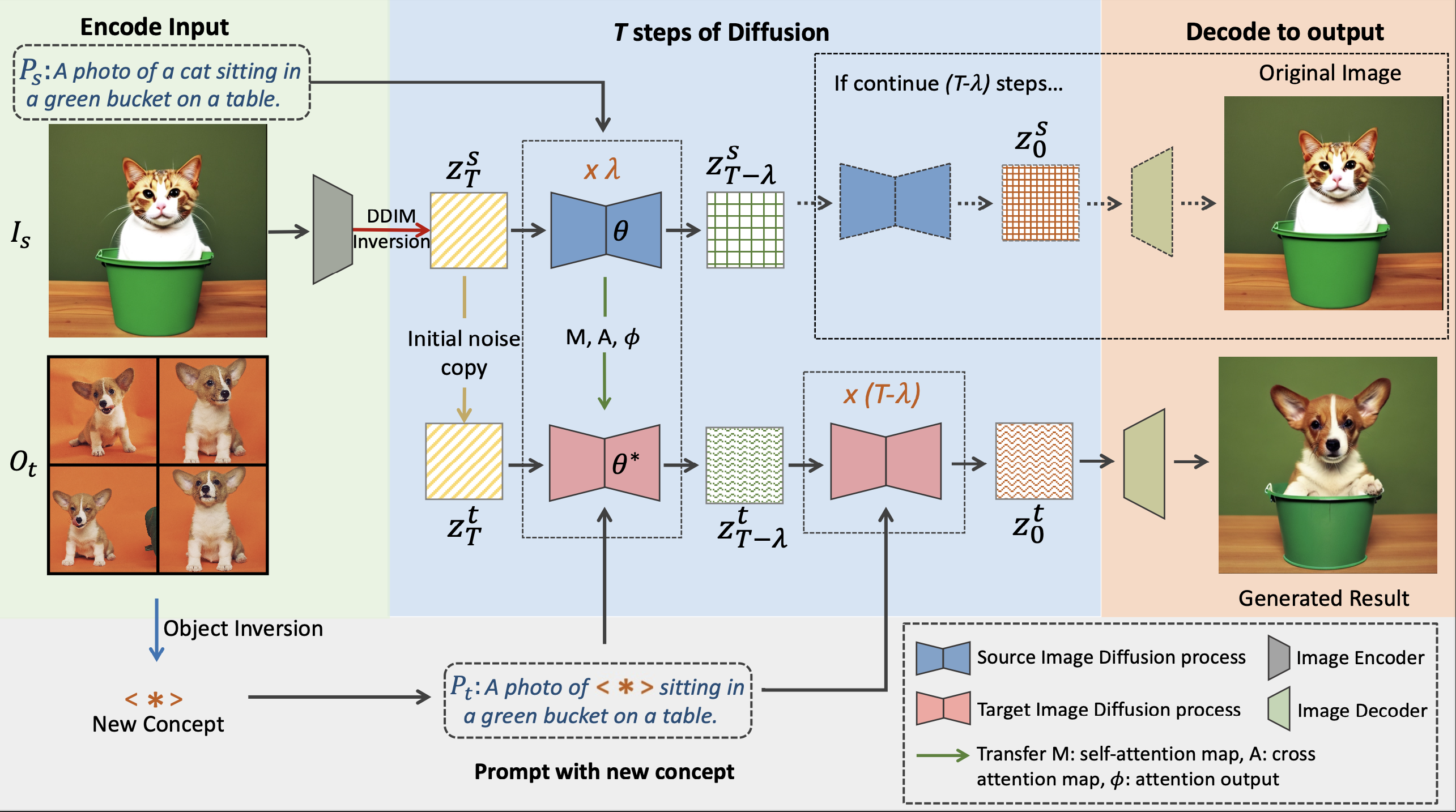

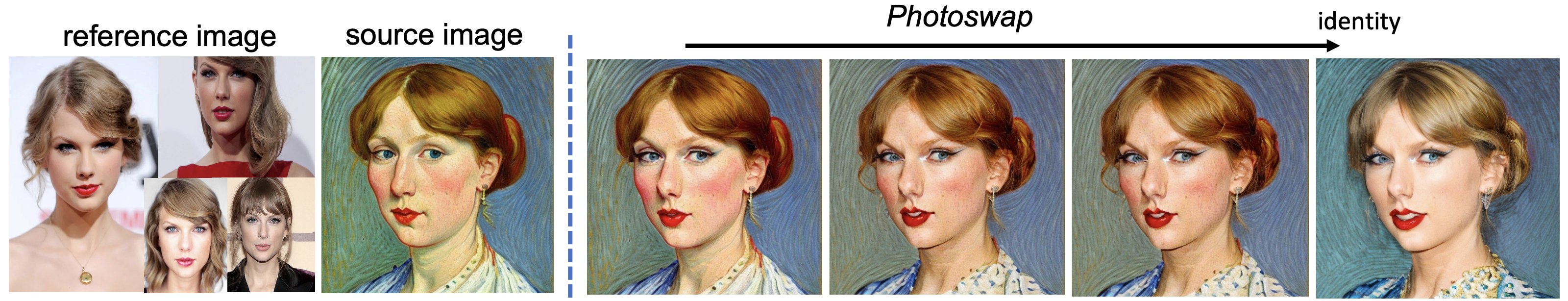

Photoswap first learns the visual concept of the subject from reference images and then swaps it

into the target image using pre-trained diffusion models in a training-free manner. We establish that a

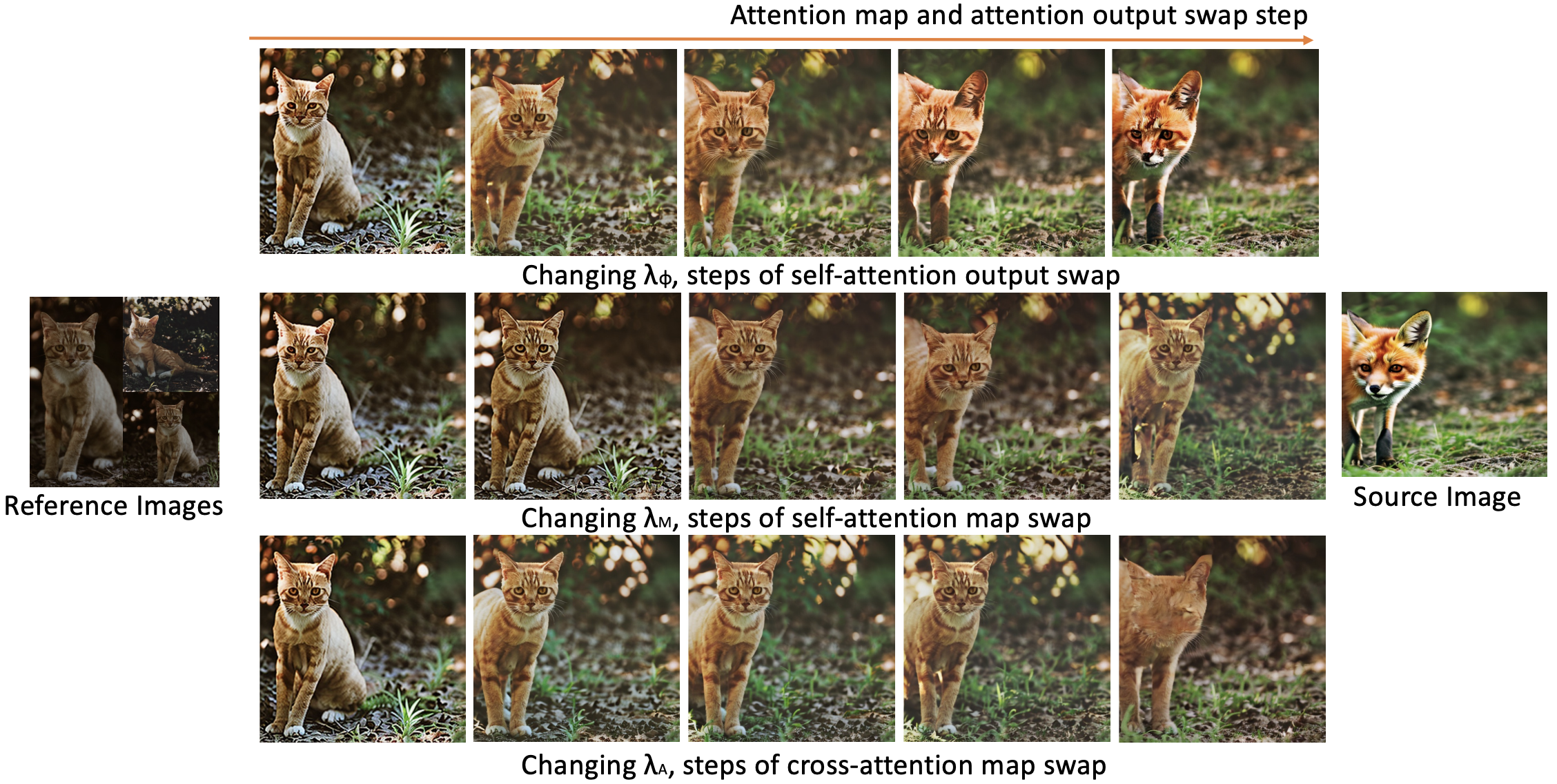

well-conceptualized visual subject can be seamlessly transferred to any image with appropriate self-attention

and cross-attention manipulation, maintaining the pose of the swapped subject and the overall coherence of the image.
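
To make the idea of attention manipulation concrete, the following is a minimal NumPy sketch and not the Photoswap implementation. It assumes one plausible reading of the sentence above: during the first few denoising steps, the attention map computed for the source image is reused when generating the target image, so that layout and pose carry over while the values (appearance) come from the new subject. The function names `attention_map` and `swapped_attention`, the `swap_steps` parameter, and the choice of which quantities are swapped are all illustrative assumptions; the text above does not specify them.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention_map(q, k):
    """Attention weights softmax(q k^T / sqrt(d)); each row sums to 1."""
    d = q.shape[-1]
    return softmax(q @ k.T / np.sqrt(d))


def swapped_attention(q_src, k_src, q_tgt, k_tgt, v_tgt, step, swap_steps):
    """Attention output for the target branch at one denoising step.

    Assumption for illustration: for step < swap_steps the source image's
    attention map replaces the target's own map, and it is always applied
    to the target's values. Later steps use the target's own map.
    """
    if step < swap_steps:
        weights = attention_map(q_src, k_src)  # reuse source layout
    else:
        weights = attention_map(q_tgt, k_tgt)  # target attends freely
    return weights @ v_tgt, weights


# Toy example: 16 spatial tokens with 8-dimensional features.
rng = np.random.default_rng(0)
q_s, k_s, q_t, k_t, v_t = (rng.normal(size=(16, 8)) for _ in range(5))
out, weights = swapped_attention(q_s, k_s, q_t, k_t, v_t, step=0, swap_steps=10)
print(out.shape, weights.shape)
```

In this sketch, a larger `swap_steps` would keep the result closer to the source layout, while a smaller value would leave the new subject more freedom; how such a trade-off is actually set in Photoswap is not stated in this abstract.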

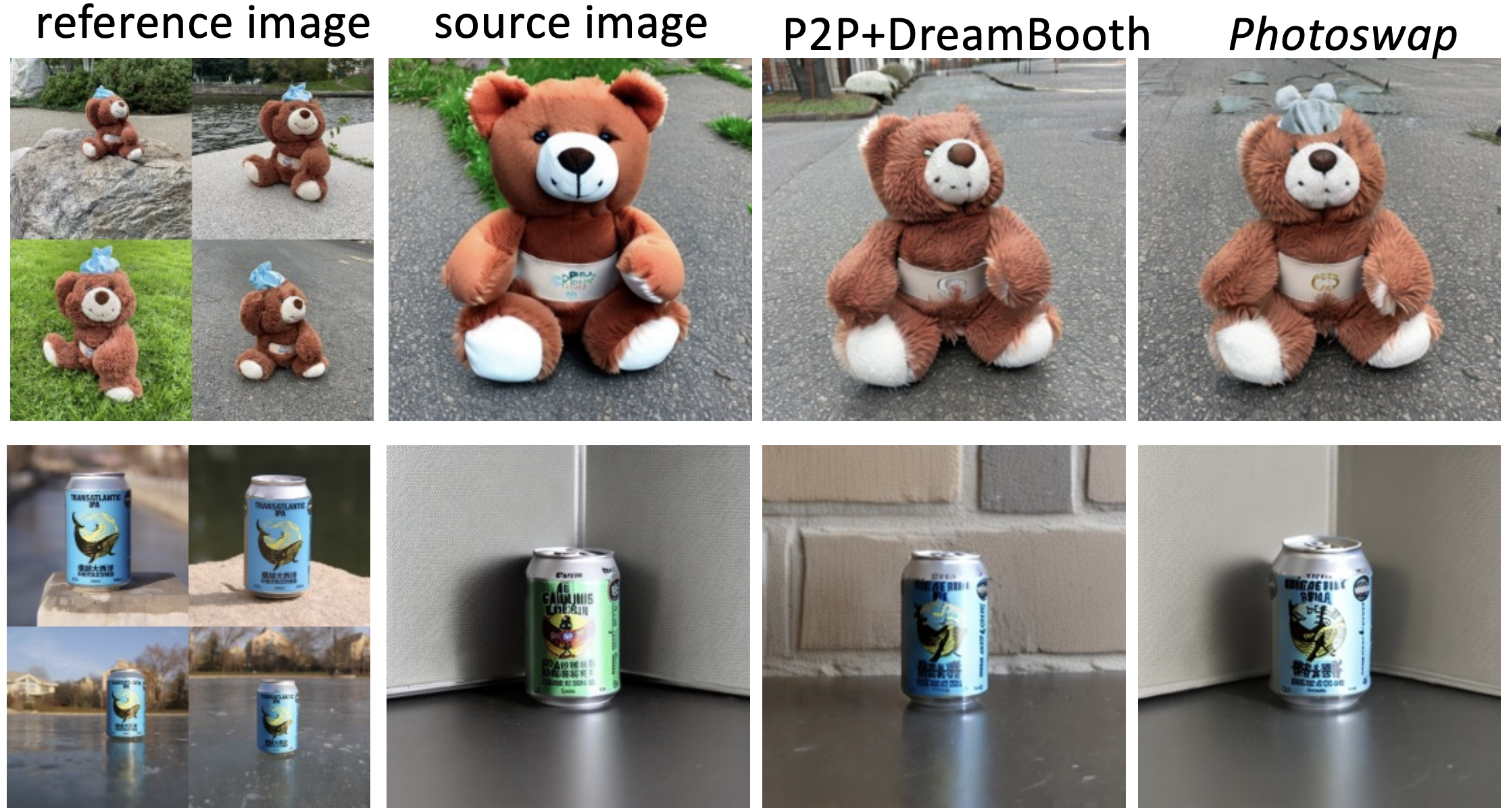

Comprehensive experiments underscore the efficacy and controllability of Photoswap in personalized subject swapping. Furthermore, Photoswap significantly outperforms baseline methods in human ratings across subject swapping, background preservation, and overall quality, revealing its vast application potential, from entertainment to professional editing.